ChatGPT has sparked a creative revolution in artificial intelligence (AI). In just a year, this language model has impressed experts with its writing abilities, adept handling of complex tasks, and surprisingly user-friendly interface. Yet there is more beneath the surface: it has also set off a surge of creativity, enabling individuals to produce content that was once out of their reach.

The possibilities with ChatGPT and similar language models (LMs) are practically limitless. The key lies in one crucial factor: crafting the right set of input instructions, called prompts, that unlock their potential across a vast spectrum of tasks. Think of it as the secret language that taps into the AI’s inner brilliance, guiding it to perform miracles with words. This idea of crafting prompts to get optimal results out of LMs led to a new field known as prompt engineering.

What’s Prompt Engineering?

Prompt engineering is the practice of skillfully designing or crafting inputs (known as prompts) for generative AI language models like GPT-3, GPT-4, and similar large language models to produce optimal outputs.

You can think of it as giving AI the perfect recipe for success. You provide the ingredients (the prompt), and the AI does the cooking (generates the output). The aim is to carefully shape prompts to achieve the best possible results from these sophisticated language models.

Much like how we improve with practice, prompt engineering is about experimenting with the wording, structure, and format of prompts. This tweaking influences the behavior of the model and helps generate responses that are specific and contextually relevant.

While artificial intelligence has existed as a research field since the 1950s, prompt engineering is a relatively recent and evolving discipline. Acquiring skills in prompt engineering is essential for grasping the strengths and limitations of large language models (LLMs).

Prompt engineering proves particularly valuable in applications that need to customize the model’s behavior without retraining it. Users can experiment with and refine prompts based on the model’s responses to achieve their desired outcomes. The effectiveness of a prompt varies with the specific model in use, so researchers and practitioners often rely on trial and error to identify the best prompts for different tasks or applications.

Understanding Prompt Engineering:

Envision ChatGPT as a potent engine awaiting the right combination of fuel and direction to operate at its peak efficiency. In this analogy, the prompt functions as both the fuel and the compass, providing the necessary instructions for ChatGPT to execute a task. Whether it’s a straightforward question, a creatively framed prompt, or a multifaceted set of guidelines, the prompt sets the stage for the AI’s performance.

For example, researchers use prompt engineering to enhance the capabilities of LLMs across various common and complex tasks, such as question-answering and arithmetic reasoning. Developers employ prompt engineering to create robust and effective techniques for interacting with LLMs and other tools.

However, prompt engineering goes beyond just creating prompts. It encompasses a diverse set of skills and techniques useful for interacting with and developing LLMs. It’s a critical skill for interfacing, building with, and comprehending the capabilities of LLMs. Prompt engineering can contribute to improving the safety of LLMs and introducing new capabilities, such as augmenting LLMs with domain knowledge and external tools.

How to Facilitate Prompt Engineering:

- Specificity: The precision of your prompt directly correlates with the specificity of the AI’s response. Adjusting the phrasing, incorporating context, and offering examples serve as navigational tools, steering ChatGPT toward a more focused and tailored output.

- Control: Crafting the prompt allows you to influence the style, tone, and format of the AI-generated content. Whether you seek a poetic piece or a factual summary, the prompt serves as a directive, shaping the outcome according to your preferences.

- Creativity: Prompt engineering unlocks ChatGPT’s capacity to generate diverse creative formats, spanning poems, code, scripts, musical compositions, emails, and letters. The more nuanced and detailed the prompt, the more creative and varied the AI’s output.

- Efficiency: By investing time in refining your prompt, you can save substantial effort and time compared to experimenting with various approaches iteratively. A well-crafted prompt streamlines the AI’s understanding, resulting in more efficient and accurate responses.

Illustrative Examples:

- Simple Prompt: “Write a poem about a robot falling in love with a human.”

- Specific Prompt: “Compose a sonnet in iambic pentameter, exploring themes of loneliness and isolation in a futuristic cyberpunk setting.”

- Instructional Prompt: “Generate a script for a short comedy skit between two office workers, focusing on the absurdity of corporate jargon.”

Benefits of Prompt Engineering:

- Unlock Full Potential: Direct ChatGPT to fulfill your specific objectives, rather than relying on its interpretation.

- Boost Productivity: Save time and effort by formulating a well-defined prompt from the outset.

- Enhance Creativity: Prompt engineering facilitates the creation of original and unique content across various formats.

- Improve Communication: Interact more effectively with ChatGPT to achieve desired results.

Getting Started with Prompt Engineering:

- Explore Examples and Tutorials: Familiarize yourself with successful prompt strategies online.

- Experiment: Test different prompts to discover what yields the best results.

- Embrace Creativity: Don’t shy away from injecting creativity into your prompts.

In essence, mastering the art of prompt engineering empowers users to unleash ChatGPT’s potential, transforming it into a valuable tool for an array of tasks—ranging from creative writing and information gathering to simply enjoying engaging interactions with AI.

To dive into the world of prompt engineering, OpenAI has rolled out a set of guides offering strategies and tactics to enhance the performance of large language models, including the likes of GPT-4. The OpenAI Prompt Engineering Guide lays out methods that can sometimes be combined for an even more impactful outcome, and OpenAI encourages users to experiment and discover the approaches that suit them best.

It’s worth noting that some of the examples in the OpenAI Prompt Engineering Guide currently function exclusively with its most advanced model, GPT-4. In general, if you encounter a situation where a model falls short on a task, OpenAI recommends you try a more advanced model.

Prompt Engineering Strategies and Tactics

Six strategies for getting better results

Write clear instructions

These models can’t read your mind. If outputs are too long, ask for brief replies. If outputs are too simple, ask for expert-level writing. If you dislike the format, demonstrate the format you’d like to see. The less the model has to guess at what you want, the more likely you’ll get it.

Tactics:

- Include details in your query to get more relevant answers

- Ask the model to adopt a persona

- Use delimiters to clearly indicate distinct parts of the input

- Specify the steps required to complete a task

- Provide examples

- Specify the desired length of the output
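Several of these tactics can be combined in one request. The sketch below is only an illustration: the function name `build_few_shot_messages` is hypothetical, and the `role`/`content` message shape is assumed to follow the chat format used by OpenAI-style APIs. No API call is made; the code only assembles the messages you would send.

```python
def build_few_shot_messages(
    instruction: str,
    examples: list[tuple[str, str]],
    query: str,
    max_words: int,
) -> list[dict[str, str]]:
    """Assemble chat messages that state the task, the length limit, and worked examples."""
    # The system message carries the instruction and the desired output length.
    messages = [
        {"role": "system", "content": f"{instruction} Answer in at most {max_words} words."}
    ]
    # Each example is shown as a user turn followed by the ideal assistant reply.
    for example_input, example_output in examples:
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    # The real query comes last, so the model continues the demonstrated pattern.
    messages.append({"role": "user", "content": query})
    return messages


messages = build_few_shot_messages(
    instruction="Rewrite each sentence in plain English.",
    examples=[
        ("We must leverage our synergies.", "We should work together."),
        ("Let's circle back offline.", "Let's talk about this later."),
    ],
    query="We need to move the needle on engagement.",
    max_words=15,
)
```

Keeping the examples as separate user and assistant turns, rather than pasting them into one long string, makes it easy to add or remove examples while you experiment.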

Provide reference text

Language models can confidently invent fake answers, especially when asked about esoteric topics or for citations and URLs. In the same way that a sheet of notes can help a student do better on a test, providing reference text to these models can help in answering with fewer fabrications.

Tactics:

- Instruct the model to answer using a reference text

- Instruct the model to answer with citations from a reference text
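As a rough sketch of both tactics, the helper below places a reference text inside triple quotes and tells the model to answer only from it, quoting the supporting passage. The function name and the exact instruction wording are assumptions made for illustration, and the `role`/`content` shape is assumed to match OpenAI-style chat messages. Nothing is sent to a model here.

```python
def build_reference_messages(reference_text: str, question: str) -> list[dict[str, str]]:
    """Return chat messages that restrict the model to the supplied reference text."""
    system = (
        "Answer using only the document delimited by triple quotes. "
        "Quote the passage that supports each claim. "
        'If the document does not contain the answer, reply "Insufficient information."'
    )
    # The reference text is fenced in triple quotes so it cannot be mistaken for instructions.
    user = f'"""{reference_text}"""\n\nQuestion: {question}'
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


reference_messages = build_reference_messages(
    "The warranty lasts two years.",
    "How long is the warranty?",
)
```

Giving the model an explicit fallback reply is one way to discourage it from inventing an answer when the reference text is silent.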

Split complex tasks into simpler subtasks

Just as it is good practice in software engineering to decompose a complex system into a set of modular components, the same is true of tasks submitted to a language model. Complex tasks tend to have higher error rates than simpler tasks. Furthermore, complex tasks can often be re-defined as a workflow of simpler tasks in which the outputs of earlier tasks are used to construct the inputs to later tasks.

Tactics:

- Use intent classification to identify the most relevant instructions for a user query

- For dialogue applications that require very long conversations, summarize or filter previous dialogue

- Summarize long documents piecewise and construct a full summary recursively
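The piecewise summarization tactic can be sketched as a small recursive function. This is a minimal illustration under stated assumptions: `summarize` stands in for whatever call you make to a language model, the function names are hypothetical, and chunks are split by character count for simplicity (a real system would more likely split on sections or tokens).

```python
from typing import Callable


def chunk_text(text: str, max_chars: int) -> list[str]:
    """Split text into consecutive pieces of at most max_chars characters."""
    return [text[i:i + max_chars] for i in range(0, len(text), max_chars)]


def summarize_recursively(
    text: str,
    summarize: Callable[[str], str],
    max_chars: int = 2000,
) -> str:
    """Summarize text of any length by summarizing chunks, then summarizing the summaries."""
    # Base case: the text fits in one request, so summarize it directly.
    if len(text) <= max_chars:
        return summarize(text)
    # Summarize each chunk, then join the partial summaries into a shorter document.
    partial_summaries = [summarize(chunk) for chunk in chunk_text(text, max_chars)]
    combined = "\n".join(partial_summaries)
    # Guard against a summarizer that does not shrink its input (would recurse forever).
    if len(combined) >= len(text):
        raise ValueError("summarize() must return text shorter than its input")
    return summarize_recursively(combined, summarize, max_chars)


# Stand-in summarizer for demonstration only: keeps the first 10 characters.
def fake_summarize(piece: str) -> str:
    return piece[:10]


result = summarize_recursively("a" * 100, fake_summarize, max_chars=30)
```

Passing the summarizer in as a parameter keeps the workflow testable without a live model.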

Give the model time to “think”

If asked to multiply 17 by 28, you might not know it instantly, but can still work it out with time. Similarly, models make more reasoning errors when trying to answer right away, rather than taking time to work out an answer. Asking for a “chain of thought” before an answer can help the model reason its way toward correct answers more reliably.

Tactics:

- Instruct the model to work out its own solution before rushing to a conclusion

- Use inner monologue or a sequence of queries to hide the model’s reasoning process

- Ask the model if it missed anything on previous passes
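One way to apply the inner monologue tactic is to ask the model to keep its reasoning in one delimited section and its final answer in another, then show the user only the answer. In the sketch below, the `<reasoning>` and `<answer>` tag names, the instruction text, and the function name are all assumptions chosen for illustration, and `sample_output` is hand-written rather than real model output.

```python
import re

# Instruction you might place in a system message (the wording is an assumption).
COT_INSTRUCTION = (
    "First work out your own solution step by step inside <reasoning> tags. "
    "Then give only the final answer inside <answer> tags."
)


def extract_answer(model_output: str) -> str:
    """Return the text inside <answer> tags, hiding the reasoning from the end user."""
    match = re.search(r"<answer>(.*?)</answer>", model_output, flags=re.DOTALL)
    if match is None:
        raise ValueError("no <answer> block found in model output")
    return match.group(1).strip()


# Hand-written example of a response that follows the instruction.
sample_output = (
    "<reasoning>17 * 28 = 17 * 20 + 17 * 8 = 340 + 136 = 476</reasoning>\n"
    "<answer>476</answer>"
)

final_answer = extract_answer(sample_output)  # "476"
```

The reasoning section shows the same step-by-step multiplication a person would do on paper, which is the behavior the “chain of thought” request is meant to encourage.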

Use external tools

Compensate for the weaknesses of the model by feeding it the outputs of other tools. For example, a text retrieval system (sometimes called RAG or retrieval augmented generation) can tell the model about relevant documents. A code execution engine like OpenAI’s Code Interpreter can help the model do math and run code. If a task can be done more reliably or efficiently by a tool rather than by a language model, offload it to get the best of both.

Tactics:

- Use embeddings-based search to implement efficient knowledge retrieval

- Use code execution to perform more accurate calculations or call external APIs

- Give the model access to specific functions
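To give a feel for embeddings-based search, the sketch below ranks documents by cosine similarity to a query vector. The three-dimensional vectors are made-up toy values, not real embeddings; in practice they would come from an embedding model. The function names are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec: list[float], documents: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the names of the k documents most similar to the query vector."""
    ranked = sorted(
        documents,
        key=lambda name: cosine_similarity(query_vec, documents[name]),
        reverse=True,
    )
    return ranked[:k]


# Toy vectors for illustration only.
documents = {
    "refund policy": [0.9, 0.1, 0.0],
    "shipping times": [0.1, 0.9, 0.0],
    "careers": [0.0, 0.1, 0.9],
}
query_vector = [0.8, 0.2, 0.0]

best_matches = top_k(query_vector, documents, k=2)  # ["refund policy", "shipping times"]
```

The text of the best-matching documents would then be placed in the prompt as reference material for the model.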

Test changes systematically

Improving performance is easier if you can measure it. In some cases a modification to a prompt will achieve better performance on a few isolated examples but lead to worse overall performance on a more representative set of examples. Therefore, to be sure that a change is net positive to performance, it may be necessary to define a comprehensive test suite (also known as an “eval”).

Tactic:

- Evaluate model outputs with reference to gold-standard answers
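A very small eval can be sketched as a function that runs a set of prompts and checks each output against a known-good answer. This is an illustration only: `run_eval` is a hypothetical name, `generate` stands in for your model call, and a simple substring check is assumed as the scoring rule (real evals often need something stricter).

```python
from typing import Callable


def run_eval(generate: Callable[[str], str], cases: list[tuple[str, str]]) -> float:
    """Return the fraction of cases whose output contains the gold-standard answer."""
    if not cases:
        raise ValueError("cases must not be empty")
    passed = sum(
        1
        for prompt, gold in cases
        if gold.lower() in generate(prompt).lower()
    )
    return passed / len(cases)


# Stand-in model for demonstration only: always gives the same reply.
def fake_model(prompt: str) -> str:
    return "The answer is 476."


cases = [
    ("What is 17 multiplied by 28?", "476"),
    ("What is the capital of France?", "Paris"),
]

score = run_eval(fake_model, cases)  # 0.5 with this stand-in model
```

Running the same cases before and after a prompt change gives you a number to compare instead of an impression from a few examples.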

Tactics

Each of the strategies listed above can be instantiated with specific tactics. These tactics are meant to provide ideas for things to try. They are by no means fully comprehensive, and you should feel free to try creative ideas not represented here.

Strategy: Write clear instructions

Tactic: Include details in your query to get more relevant answers

In order to get a highly relevant response, make sure that requests provide any important details or context. Otherwise you are leaving it up to the model to guess what you mean.

| Worse | Better |
| --- | --- |
| How do I add numbers in Excel? | How do I add up a row of dollar amounts in Excel? I want to do this automatically for a whole sheet of rows with all the totals ending up on the right in a column called “Total”. |
| Who’s president? | Who was the president of Mexico in 2021, and how frequently are elections held? |
| Write code to calculate the Fibonacci sequence. | Write a TypeScript function to efficiently calculate the Fibonacci sequence. Comment the code liberally to explain what each piece does and why it’s written that way. |
| Summarize the meeting notes. | Summarize the meeting notes in a single paragraph. Then write a markdown list of the speakers and each of their key points. Finally, list the next steps or action items suggested by the speakers, if any. |

Prompt Engineering Examples:

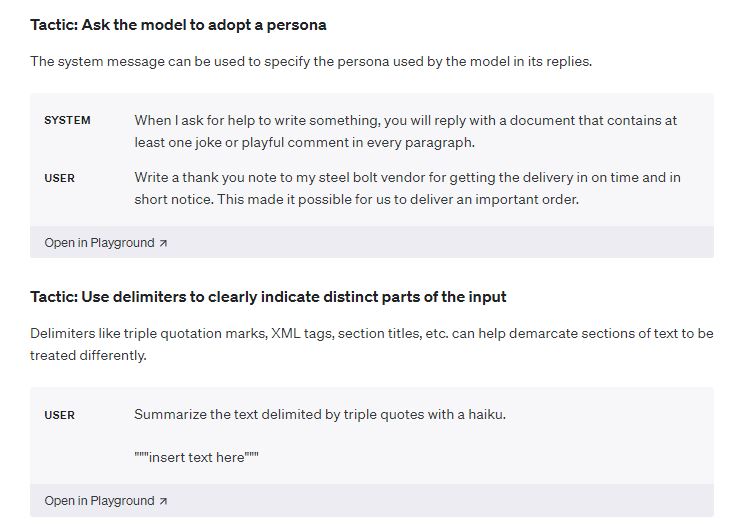

Tactic: Ask the model to adopt a persona

The system message can be used to specify the persona used by the model in its replies.
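A minimal sketch of the persona tactic, assuming the `role`/`content` message shape used by OpenAI-style chat APIs. The function name and the persona text are made up for illustration, and no request is sent.

```python
def with_persona(persona: str, user_query: str) -> list[dict[str, str]]:
    """Put the persona in the system message and the request in the user message."""
    return [
        {"role": "system", "content": persona},
        {"role": "user", "content": user_query},
    ]


persona_messages = with_persona(
    persona="You are a patient math tutor who explains every step in simple terms.",
    user_query="Why does multiplying two negative numbers give a positive number?",
)
```

Keeping the persona separate from the query means the same persona can be reused across many requests.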

Tactic: Use delimiters to clearly indicate distinct parts of the input

Delimiters like triple quotation marks, XML tags, section titles, etc. can help demarcate sections of text to be treated differently.
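For instance, XML-style tags can separate two pieces of input that the model should treat independently. The sketch below is illustrative: the tag names, the instruction wording, and the function names are assumptions.

```python
def wrap_in_tags(tag: str, text: str) -> str:
    """Surround text with matching opening and closing XML-style tags."""
    return f"<{tag}>\n{text}\n</{tag}>"


def build_comparison_prompt(first_article: str, second_article: str) -> str:
    """Build a prompt in which each article sits inside its own pair of tags."""
    return "\n\n".join(
        [
            "You will be given two articles delimited by XML tags. "
            "Summarize each article, then say which one makes the stronger argument.",
            wrap_in_tags("article_1", first_article),
            wrap_in_tags("article_2", second_article),
        ]
    )


comparison_prompt = build_comparison_prompt(
    "Remote work improves focus.",
    "Office work improves collaboration.",
)
```

Because each article has its own tags, an instruction-like sentence inside an article is less likely to be mistaken for part of your request.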

You can read the rest of the prompt engineering examples at OpenAI.com

Below is a prompt engineering tutorial from Anu Kubo about how to get ChatGPT and large language models (LLMs) to give you perfect responses.